
January 1, AI Startup Battles Deepfake Fraud With Cutting-Edge Voice Authentication

A startup claims it can help protect millions of people from losing money to artificial intelligence-enhanced robocall scams. The arrival of accessible generative AI has heightened fears that scammers will trick victims into handing over their private information by creating deepfakes, or replicas of people’s voices made with the intent to deceive.

Pindrop, a startup dedicated to voice authentication, claims to have the know-how and technology to verify a user’s identity and alert customers to content made by AI programs.

Vijay Balasubramaniyan, Pindrop’s CEO, appeared before Congress on Wednesday to speak on the threat of deepfakes and the guardrails that Congress needed to implement to combat them. While many of the panelists were focused on “superintelligence” or on political misinformation, Balasubramaniyan emphasized the immediate detrimental effects of deepfakes.

“Fundamentally, the point was that deepfakes break commerce because businesses can’t trust who’s on the other end,” Balasubramaniyan told the Washington Examiner.

“Is it a human or machine? Deepfakes break information because you can’t trust if it was Sen. [Chuck] Schumer saying something or Tom Hanks selling dental plan ads or not. And then deepfakes break all communication because if I’m a grandparent and don’t know if it’s my grandkids, I don’t know who to trust.”

Pindrop was founded in 2015 by Balasubramaniyan, Ahamad Mustaque, and Paul Judge in an attempt to adapt the CEO’s 2011 Ph.D. thesis into an actionable product. Balasubramaniyan completed his graduate work at the Georgia Institute of Technology, where he focused on identifying features in voice calls that could verify a user’s identity.

The CEO discovered several audio characteristics that can be combined into a voice “fingerprint” for judging whether a voice is real. For example, the fingerprint can indicate whether a call is coming from a particular user’s phone, or it can pick out elements of the sound that can only be replicated by the “shape of your entire vocal tract,” the CEO said.

The startup is in its early years but has partnerships with several Big Tech companies, including Google Cloud and Amazon Web Services, the company said. Pindrop was unwilling to say how many users it had, but it claims to be working with eight of the top 10 banks and credit unions in the United States, 14 of the top 20 insurers, and three of the top five brokerages.

Pindrop’s software has also analyzed over 5.3 billion calls and prevented $2.3 billion in fraud losses, a spokesman told the Washington Examiner.

Pindrop is used only by commercial companies, but Balasubramaniyan said he had spoken with several members of Congress about the government using its services.

AI is a quickly changing field, making it difficult for AI “fact-checkers” to keep up. Pindrop says it has stayed reasonably up to date. When Microsoft released its VALL-E text-to-speech model, Pindrop claimed it could identify audio generated by the software 99% of the time.

Deepfake-based fraud has long been a problem. The technology came before Congress over the summer, when the Senate Judiciary Committee hosted a hearing on AI and Human Rights. Jennifer Destefano appeared before the committee and told the story of how she was nearly extorted out of $50,000 by scammers using an AI-generated vocal clone of her daughter.

“It wasn’t just her voice, it was her cries, it was her sobs,” Destefano told Congress. The mother nearly fell for the call until her husband told her their daughter was safe and with him.

Seniors have been specifically targeted with deepfakes. Sen. Mike Braun (R-IN) sent a letter to the Federal Trade Commission in May warning of voice clones and chatbots allowing scammers to trick elderly citizens into thinking they’re talking to a relative or close friend.

“In one case, a scammer used this approach to convince an older couple that the scammer was their grandson in desperate need of money to make bail, and the couple almost lost $9,400 before a bank official alerted them to the potential fraud,” the letter read.

Businesses have also been targeted: 37% of global companies reported attempts to access their websites with counterfeit voices, according to a survey by the ID verification service Regula.

Why It Matters (op-ed)

The rise of deepfakes poses a significant threat to our society, not only in terms of political misinformation but also in the realm of commerce. AI-generated voices have the potential to deceive millions of people, leading to massive financial losses and a breakdown of trust in communication.

Pindrop’s voice authentication technology could be a vital tool in combating this issue. By verifying users’ identities and alerting customers to AI-generated content, we can protect individuals and businesses from deepfake fraud. It’s crucial that we invest in solutions like Pindrop to stay ahead of rapidly advancing AI technology and maintain the integrity of our communication systems.

We encourage you, our loyal readers, to share your thoughts and opinions on this issue. Let your voice be heard and join the discussion below.

-

Entertainment2 years ago

Entertainment2 years agoWhoopi Goldberg’s “Wildly Inappropriate” Commentary Forces “The View” into Unscheduled Commercial Break

-

Entertainment2 years ago

Entertainment2 years ago‘He’s A Pr*ck And F*cking Hates Republicans’: Megyn Kelly Goes Off on Don Lemon

-

Featured2 years ago

Featured2 years agoUS Advises Citizens to Leave This Country ASAP

-

Featured2 years ago

Featured2 years agoBenghazi Hero: Hillary Clinton is “One of the Most Disgusting Humans on Earth”

-

Entertainment2 years ago

Entertainment2 years agoComedy Mourns Legend Richard Lewis: A Heartfelt Farewell

-

Featured2 years ago

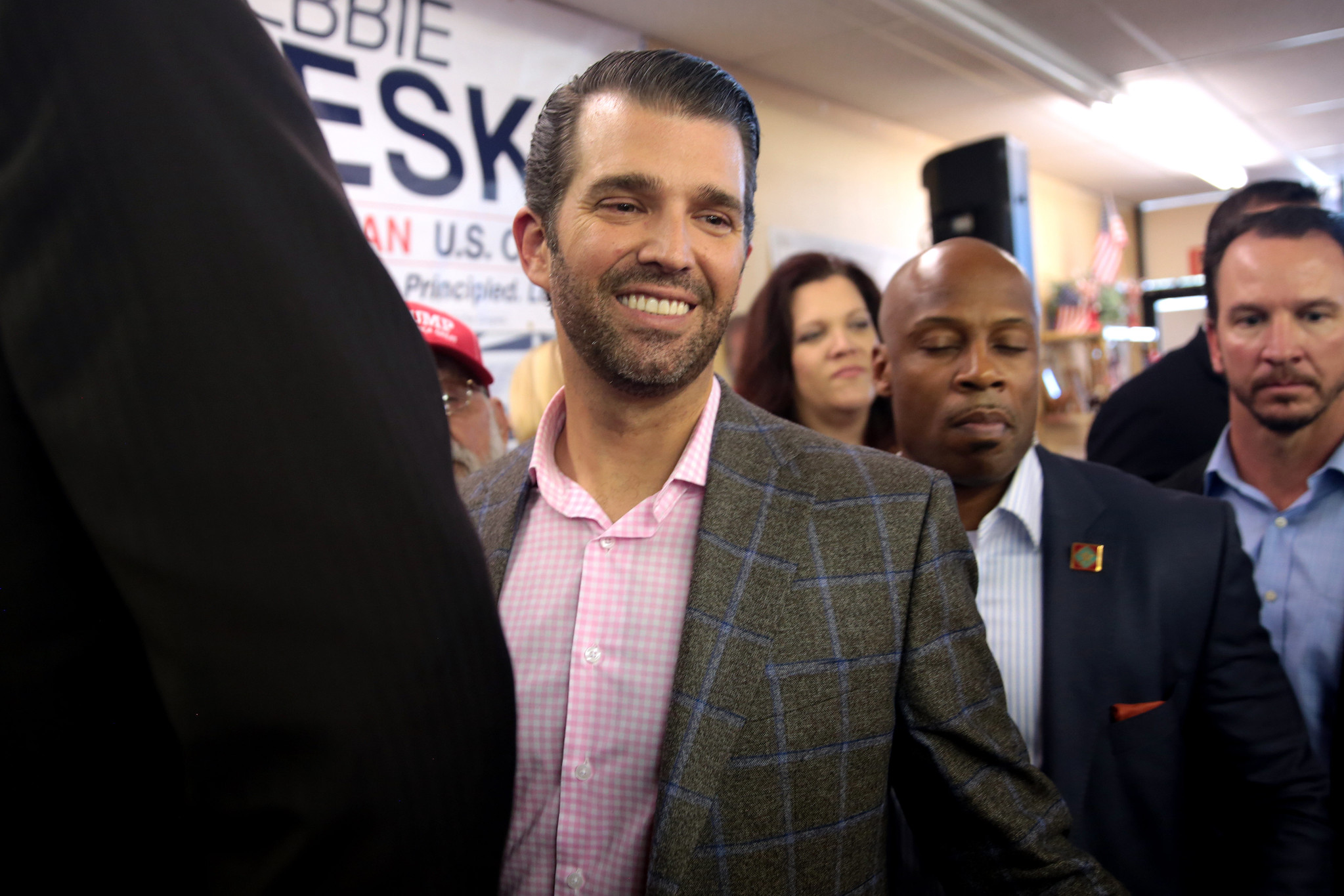

Featured2 years agoFox News Calls Security on Donald Trump Jr. at GOP Debate [Video]

-

Latest News2 years ago

Latest News2 years agoNude Woman Wields Spiked Club in Daylight Venice Beach Brawl

-

Latest News2 years ago

Latest News2 years agoSupreme Court Gift: Trump’s Trial Delayed, Election Interference Allegations Linger